An IoT Piggy Bank you can talk to

Last year I wrote a blog post about an internet-connected piggy bank that I made. Kids could shake the pig and it would tell them how much money was in an online bank account.

It gave me the idea that it would be nice if kids could talk back to the pig and ask it questions, so I set to work on a new version. This article explains what Pig eBank 2 does, shares a few learnings from prototyping it and considers how it relates to the idea of open banking.

What Pig eBank 2 does

The Pig sits at home providing a physical and interactive link to a child’s online bank account. Here’s an overview of some of the things it can do.

Alerts

Much like Pig eBank 1, when there is a transaction — for example, if the child receives pocket money — the pig alerts the child using lights and by pointing its ears up.

In the video above, the pig is connected to a dummy account, with parents using a prototype app. But I also connected the Pig to my Monzo account. As well as providing real transactional events, the data it provided meant that it could be queried, as I’ll explain next.

Ask questions

Version 1 of the Pig provided the same information whenever it was shaken (saving, spending and balance). Version 2 makes the conversation two-sided. You can ask it questions like:

“How much money do I have?”

“How much have I spent this week?”

“How much did I save last month?”

Natural language understanding means you don’t have to use those exact phrases, so you might say “What’s my balance” or “How much have I saved”. Being able to query finances in your own language means you can get the information you care about, in a more human way. This hopefully makes finances much more accessible for children.

Set goals and track them

The new Pig allows you to set a savings goal:

“I want to save for a bike”.

You can then track that goal:

“How much more do I need to save?”.

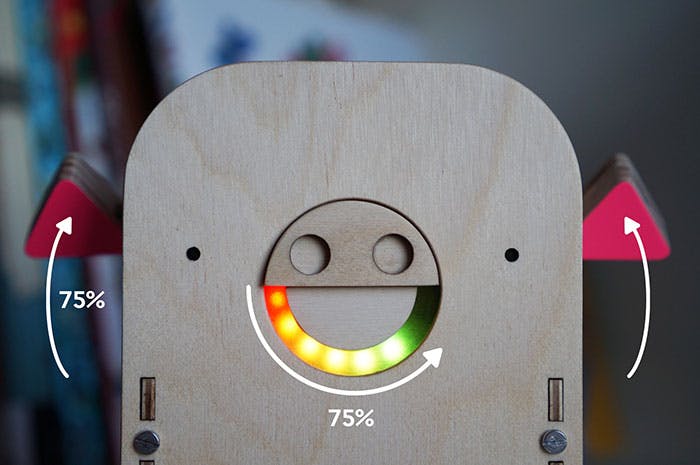

The pig verbally tells you how you’re progressing and you also get feedback through lights and the rotation of the pig’s ears.

In fact the ears don’t just indicate progress when you ask the pig. Whenever it is inactive the rotation of the ears provides a glanceable overview of how close to your goal you are.

The pig’s ears provide a glanceable view of your progress towards a savings goal, from anywhere in the room.
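As a rough illustration of how progress towards a goal could drive the ears, here is a minimal sketch that maps the amount saved onto a rotation angle. It is not the code running on the Pig: the function name `ear_angle` and the 0 to 90 degree range are assumptions made for the example.

```python
def ear_angle(saved: float, goal: float,
              min_angle: float = 0.0, max_angle: float = 90.0) -> float:
    """Map savings progress onto an ear rotation angle in degrees.

    The angle range is a hypothetical choice; a real servo would need
    its own calibrated limits.
    """
    if goal <= 0:
        # No meaningful goal set, so leave the ears at rest.
        return min_angle
    # Clamp progress to 0..1 so overshooting the goal doesn't over-rotate.
    progress = max(0.0, min(saved / goal, 1.0))
    return min_angle + progress * (max_angle - min_angle)


if __name__ == "__main__":
    # A quarter of the way to a 100 pound goal.
    print(ear_angle(25, 100))
```

Clamping the progress value means the ears stop at their upper limit once the goal is reached, rather than continuing to turn as more money is saved.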

In Enchanted Objects David Rose discusses ‘Glanceability’ and how the brain can process information before you fully pay attention to it. This is something cognitive scientists call pre-attentive processing. He argues that because glanceable objects are “delightfully non-distracting” they are more likely to be viewed instinctively throughout the day and this can lead to behaviour change. He provides examples from energy monitoring, but perhaps a glanceable view of finances might encourage children to save?

A few learnings

1. The value of a dedicated device

Using a dedicated device as a service channel provides a number of advantages that aren’t possible with something like Amazon Echo. You can:

- Include custom functionality — like alerts.

- Create more personality through form, light and mechanical movement.

- Provide glanceable information (as discussed above).

- Remind people about your service to encourage interaction. This is related to Donald Norman’s idea of “information in the world” in The Design of Everyday Things. If your service is hidden inside an Echo, people have to remember for themselves that they can access it: the knowledge is “in their head”.

- Enable a potentially more natural interaction: There is a specific physical representation of the service and it becomes anthropomorphised. Conversing with it then feels less anonymous than speaking to a generic black cylinder.

As it becomes cheaper to embed voice processing into products, maybe we’ll start to see the Internet of Conversational Things!

2. Design for mistakes

Designing for voice (rather than screens) means you have to focus much more on errors — people can say anything and they can also be misheard.

There are a few strategies to deal with this. I used a mixture of enabling undo (if there is a change to the system), doing nothing (for queries), and upfront confirmation (if there is a high chance of being misheard). When using confirmations, I tried to group them together so they didn’t get annoying.

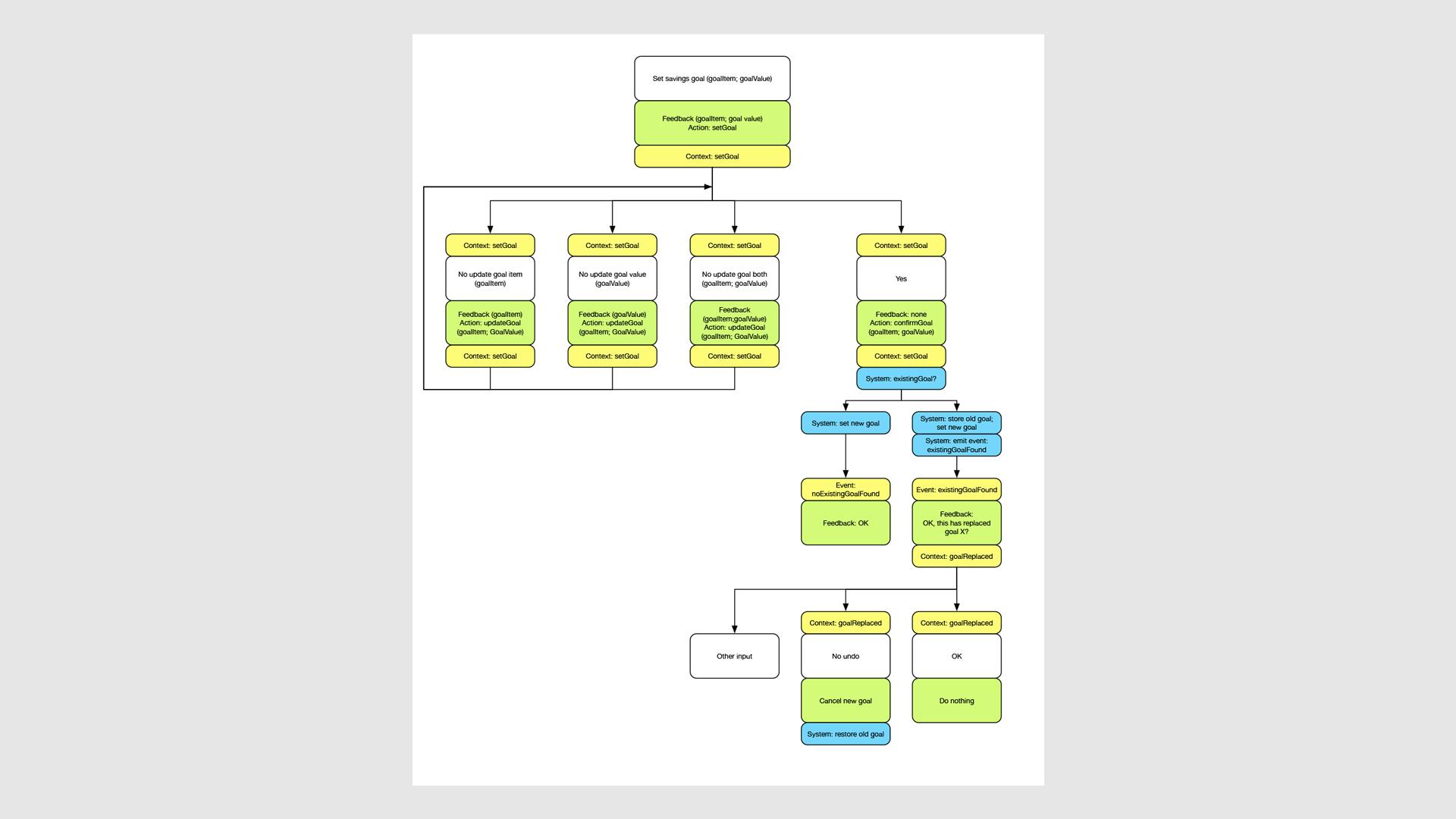

I used api.ai to handle the logic around errors, but to help me figure out how these strategies should work, I had to crack open OmniGraffle and map out the flows. This also helped me to check that all errors had been catered for.
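The three strategies can be sketched as a simple decision rule. This is only an illustration and not how the api.ai flows were built: the `Request` type, the `confidence` score standing in for "likely to be misheard", and the 0.6 threshold are all assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    UNDO = "undo"                    # act now, let the user reverse it
    DO_NOTHING = "do_nothing"        # a wrong answer to a query is harmless
    CONFIRM_FIRST = "confirm_first"  # check before acting


@dataclass
class Request:
    intent: str          # e.g. "set_goal" or "get_balance" (hypothetical names)
    changes_state: bool  # True if handling it alters the system
    confidence: float    # hypothetical recognition score, 0.0 to 1.0


def choose_strategy(request: Request, confirm_below: float = 0.6) -> Strategy:
    """Pick an error-handling strategy for one spoken request."""
    if request.confidence < confirm_below:
        # High chance of being misheard: confirm up front.
        return Strategy.CONFIRM_FIRST
    if request.changes_state:
        # The system changes, so offer an undo afterwards.
        return Strategy.UNDO
    # Plain query: no extra handling needed.
    return Strategy.DO_NOTHING


if __name__ == "__main__":
    print(choose_strategy(Request("set_goal", True, 0.9)))
```

In this sketch a poorly recognised request always gets a confirmation, whether or not it changes anything. That is one possible ordering of the rules, chosen here for simplicity.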

3. Communicate state

A voice system by itself doesn’t provide any feedback on what’s happening (aside from when it’s talking): is it listening? is it thinking? has it finished talking? This makes it hard to converse with and is even more apparent if it takes a while for the cloud services to respond.

Amazon Echo and Google Home have a set of additional noises and light patterns to provide cues as to what’s happening. I developed my own set of cues using light and also the movement of the ears.
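One way to think about these cues is as a small state machine, where every conversational state has its own light and ear behaviour. The sketch below is illustrative only: the state names, event names and specific cues are assumptions, apart from the idle ears showing goal progress, which is described earlier.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    LISTENING = auto()
    THINKING = auto()
    SPEAKING = auto()


# Hypothetical cue for each state: (light pattern, ear behaviour).
CUES = {
    State.IDLE: ("off", "show goal progress"),
    State.LISTENING: ("steady", "ears up"),
    State.THINKING: ("pulsing", "ears twitching"),
    State.SPEAKING: ("flickering", "ears still"),
}

# Allowed transitions, keyed by (current state, event).
TRANSITIONS = {
    (State.IDLE, "wake"): State.LISTENING,
    (State.LISTENING, "utterance_end"): State.THINKING,
    (State.THINKING, "response_ready"): State.SPEAKING,
    (State.SPEAKING, "speech_done"): State.IDLE,
}


def next_state(state: State, event: str) -> State:
    """Return the new state, staying put on an unexpected event."""
    return TRANSITIONS.get((state, event), state)


if __name__ == "__main__":
    state = State.IDLE
    for event in ["wake", "utterance_end", "response_ready", "speech_done"]:
        state = next_state(state, event)
        print(event, "->", state.name, CUES[state])
```

Keeping the cues in one table makes it easy to check that no state is left without feedback, which matters most in the thinking state while the cloud services respond.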

4. Voice is great at uncovering user needs

Once I had finished my prototype I tested it out with my 4-year-old son (younger than my target users). He tried out the things I showed him it could do, but then he said “How much do I need to save to go camping?”. It surprised me. I hadn’t considered that when setting a savings goal, kids might not have an idea of what something costs, and I definitely hadn’t considered that the goal might not be a single specific thing with a fixed cost, but an activity made up of multiple, variable costs.

Designing in this functionality is an interesting challenge, and I’m thinking of ways to at least handle it gracefully even if a proper answer can’t be provided. However, the story illustrates an interesting characteristic of voice interfaces. They don’t offer users clear boundaries as to what they can do — there’s a lack of affordances. This means users can ask for what they really want, rather than being limited by the buttons on a screen.

So voice interfaces may provide a fast-track to understanding customer needs. But someone needs to listen to these requests and the technology needs to log what customers are asking for so that this can be analysed and acted upon.
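A minimal sketch of what that logging and analysis might look like is below. It assumes a hypothetical log format in which each entry records the utterance and the matched intent, with `None` when nothing matched. It is not based on any particular voice platform.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def summarise_unhandled(
    log: List[Dict[str, Optional[str]]],
) -> List[Tuple[str, int]]:
    """Count requests that matched no intent, most frequent first."""
    counts = Counter(
        entry["utterance"].strip().lower()
        for entry in log
        if entry["intent"] is None
    )
    return counts.most_common()


if __name__ == "__main__":
    # Hypothetical log entries for illustration.
    log = [
        {"utterance": "How much money do I have?", "intent": "get_balance"},
        {"utterance": "How much do I need to save to go camping?", "intent": None},
        {"utterance": "how much do I need to save to go camping? ", "intent": None},
        {"utterance": "Can I buy a dog?", "intent": None},
    ]
    for utterance, count in summarise_unhandled(log):
        print(count, utterance)
```

Even a simple count like this would surface requests such as the camping question, which can then be reviewed by a person.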

A note on open banking

The Pig is connected to a real banking service, Monzo, using their API. PSD2 will enable third parties to use similar ‘Open’ APIs to build applications and services around all financial institutions. This means the Pig could in theory be connected to any bank account in the future.

I think this hints at a different kind of opportunity for what PSD2 can enable. Rather than omniscient dashboards that aggregate your financial world, maybe we will see very niche banking services?

It is unlikely that anyone is going to set up a bank just to create a service helping children save money, but instead they could ‘piggy-back’ (sorry!) on existing banking infrastructure. In this scenario banking becomes simplified and focussed for key audiences and use cases. What other niche audiences and needs are there that small, specific and simple banking services could be created for?

Thanks for reading

As with the previous article, it would be great to hear your thoughts, questions and suggestions.