Toilet seats & senior citizens – experiments with image recognition technology

Our Financial Services team has been experimenting with some of the technologies currently transforming how insurance is bought and delivered. Our brief – how might we use emerging technology to reimagine insurance for consumers?

Avoiding white elephants

These are heady times in FS and it's all too easy to embrace new technologies without really understanding what benefit they will bring. Some will help, others will transform, and inevitably many will prove expensive solutions in search of problems.

The challenge is of course being able to tell the difference. But where to start? One of the technologies I've been looking into is digital image recognition.

Among the key trends we see in insurance are moves towards tailored policies (where the risk is modelled on measured, personal data) and the emergence of just-in-time cover and dynamic pricing.

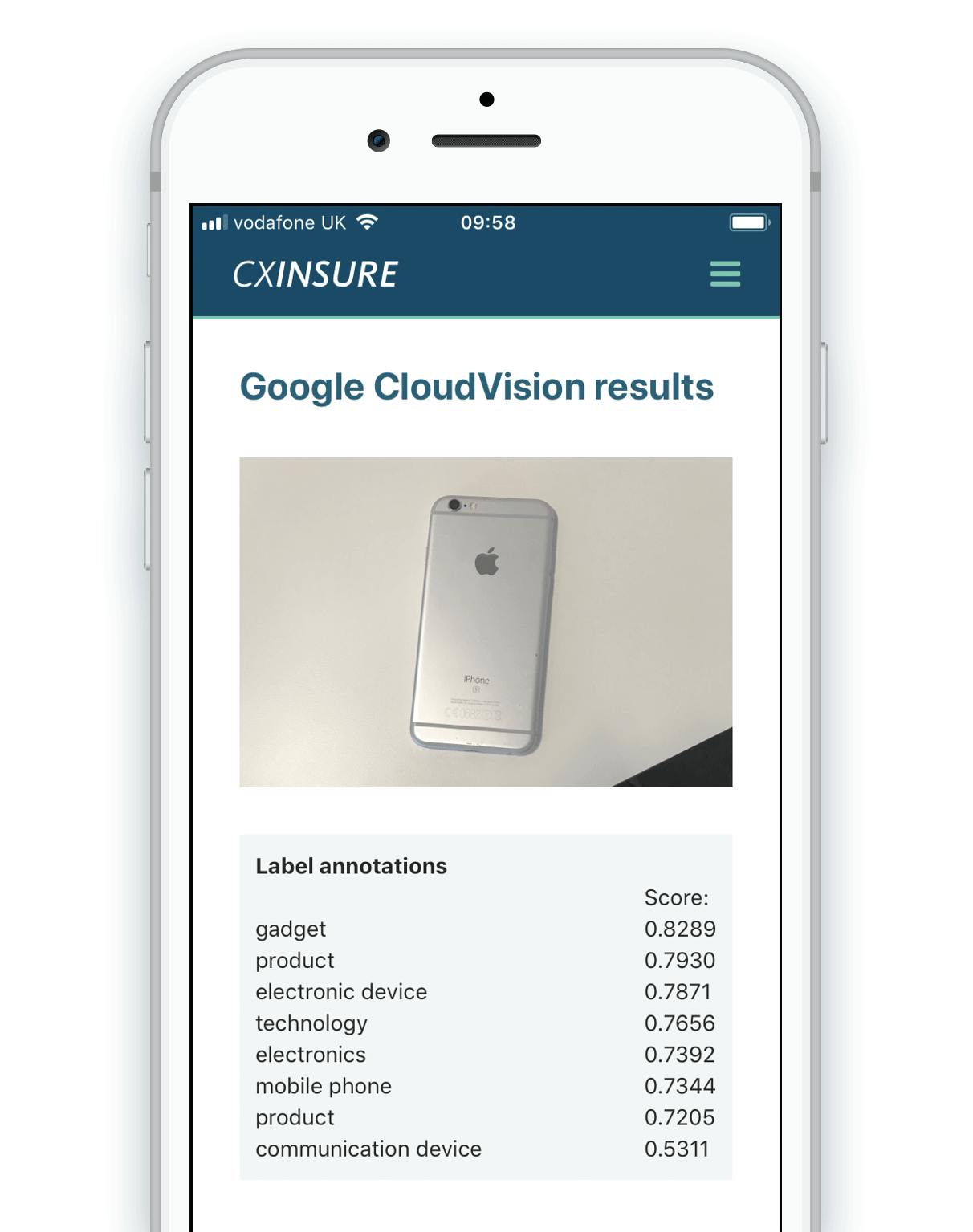

With that in mind, I wanted to test some of the ways that a mobile phone's camera could be used to improve in-app customer journeys. Our first experiment was quickly insuring personal possessions using the camera as an optical scanner.

Prototyping quickly with cloud services

In the age of open platforms, machine learning has never been more accessible, with a plethora of APIs instantly available to the innovative product team.

Notable image analysis platforms include Clarifai, Google Cloud Vision and Amazon Rekognition. We chose Google for our experiment:

Google Cloud Vision API enables developers to understand the content of an image by encapsulating powerful machine learning models in an easy to use REST API.

Our first objective was to test this claim. We quickly integrated the service into an existing prototype – a hybrid iOS app bootstrapped using Ionic and Cordova – and started taking photos!
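For anyone curious what that integration involves, here is a minimal sketch of the request body the Cloud Vision `images:annotate` endpoint expects when asking for labels and logos. It is not our prototype code: the helper name `buildAnnotateRequest` and the `maxResults` default are assumptions for illustration, and how you obtain the base64 photo and an API key is left out.

```typescript
// Endpoint for Cloud Vision image annotation; an API key or OAuth token is also needed.
const VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate";

// The two Cloud Vision feature types relevant to this experiment.
type FeatureType = "LABEL_DETECTION" | "LOGO_DETECTION";

interface AnnotateRequest {
  requests: Array<{
    image: { content: string };
    features: Array<{ type: FeatureType; maxResults: number }>;
  }>;
}

// Hypothetical helper: wraps a base64-encoded photo in an annotate request body.
// The maxResults default of 10 is an arbitrary choice for this sketch.
function buildAnnotateRequest(base64Image: string, maxResults: number = 10): AnnotateRequest {
  return {
    requests: [
      {
        image: { content: base64Image },
        features: [
          { type: "LABEL_DETECTION", maxResults },
          { type: "LOGO_DETECTION", maxResults },
        ],
      },
    ],
  };
}
```

The resulting object would be serialised with `JSON.stringify` and sent as a POST to `VISION_ENDPOINT`.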

Did it work?

This was a quick test, evaluating the service for a specific scenario:

- To be worthwhile this feature would need to feel more convenient than typing into a form.

- To succeed, the identification would need to be specific and accurate enough that no manual input was needed.

In that context the result was a resounding "no". We failed fast!
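To make the two criteria above concrete, here is a rough sketch of the kind of check an app could run on a response: only pre-fill the brand field when a logo match is confident enough, otherwise fall back to the form. The `description` and `score` fields mirror the annotations Cloud Vision returns, but the function name `suggestBrand` and the 0.9 threshold are assumptions for illustration, not values from our tests.

```typescript
// Shape of the annotation fields this sketch relies on.
interface Annotation {
  description: string;
  score: number; // confidence between 0 and 1
}

interface VisionResult {
  labelAnnotations?: Annotation[];
  logoAnnotations?: Annotation[];
}

// Hypothetical cut-off: below this we assume the user is better off typing.
const MIN_SCORE = 0.9;

// Returns a brand name to pre-fill, or null to signal "show the manual form".
function suggestBrand(result: VisionResult, minScore: number = MIN_SCORE): string | null {
  const logos = result.logoAnnotations ?? [];
  let best: Annotation | null = null;
  for (const logo of logos) {
    if (best === null || logo.score > best.score) {
      best = logo;
    }
  }
  return best !== null && best.score >= minScore ? best.description : null;
}
```

A `null` here is the "failed" path: the camera step added time and the user still had to type.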

Consistency

In some set-piece scenarios the results were impressively accurate. But we needed more than that – we needed them to be useful.

Our real hope was product and brand recognition, but the service tended towards general characteristics over exact identification. Perhaps this isn’t a real surprise with the current trend for minimally branded industrial design, where less is often more.

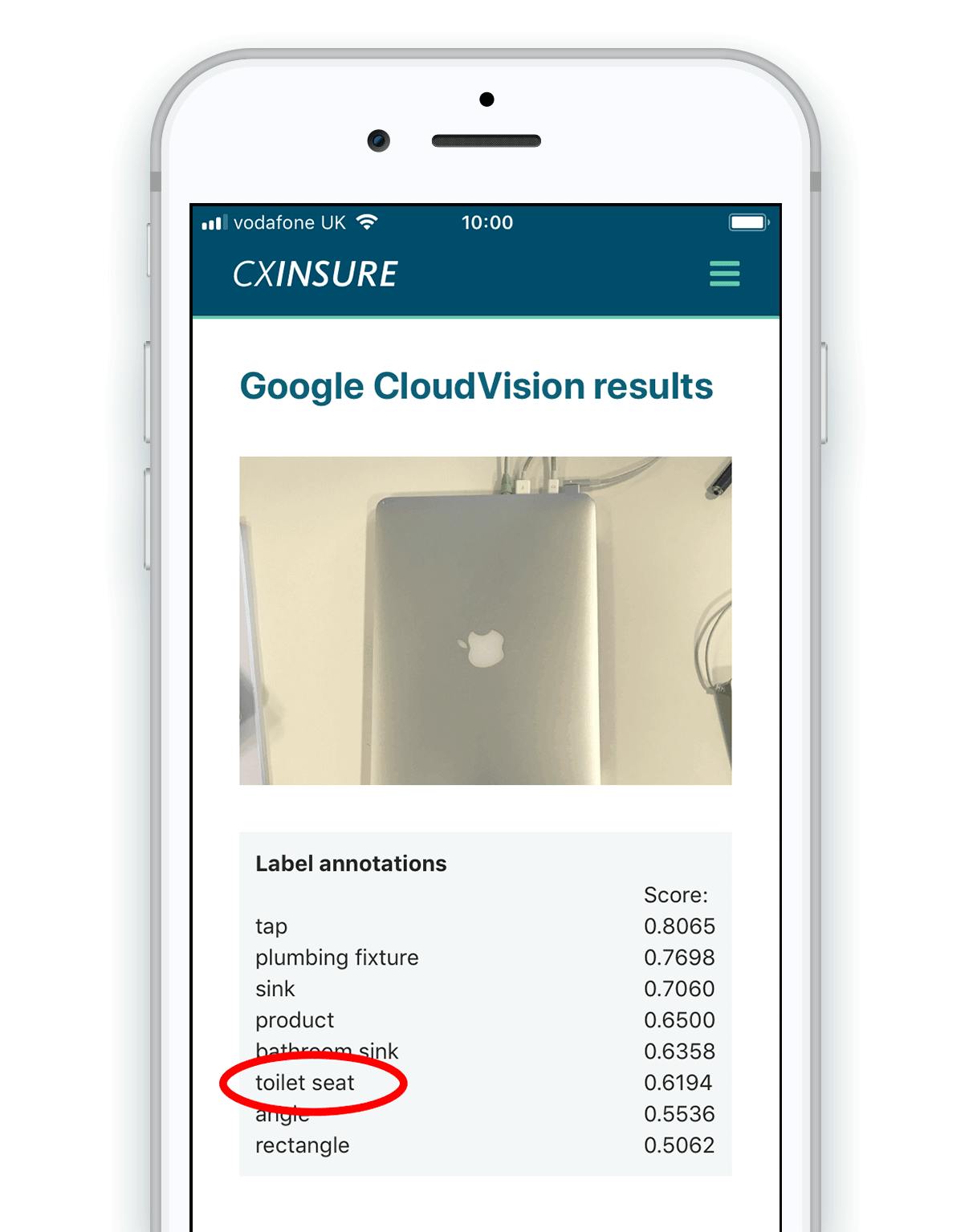

And just a few variables could cause things to go south quite quickly. The service often appeared to be hampered by background objects and scenery. Are those cables connected to a laptop, or pipes attached to something in your bathroom?

Speed

Speed is a feature, and the lack of it can break the user experience. While admittedly we did nothing to try to improve waiting times in our tests, delays in the 5 to 10 second range were common. That’s a frustratingly long time to stare at a screen while wishing you could just type into a form field.
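One thing we could have tried is shrinking photos before upload. We did not measure where the time went, so treating upload size as a cause is an assumption, and the helper name `fitWithin` and the 1024-pixel limit in the example are illustrative only. A minimal sketch of the sizing step:

```typescript
interface Size {
  width: number;
  height: number;
}

// Hypothetical helper: scales a photo's dimensions down so the longest side
// fits within maxSide, preserving aspect ratio. Smaller images are left as-is.
function fitWithin(original: Size, maxSide: number): Size {
  const longest = Math.max(original.width, original.height);
  if (longest <= maxSide) {
    return { width: original.width, height: original.height };
  }
  const scale = maxSide / longest;
  return {
    width: Math.round(original.width * scale),
    height: Math.round(original.height * scale),
  };
}
```

For example, `fitWithin({ width: 4032, height: 3024 }, 1024)` gives a 1024 by 768 target, which could then be passed to whatever resize step the app uses before encoding the photo.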

A is for...

Logo recognition went pear-shaped, despite accurate implementation of the service.

Sensitivity

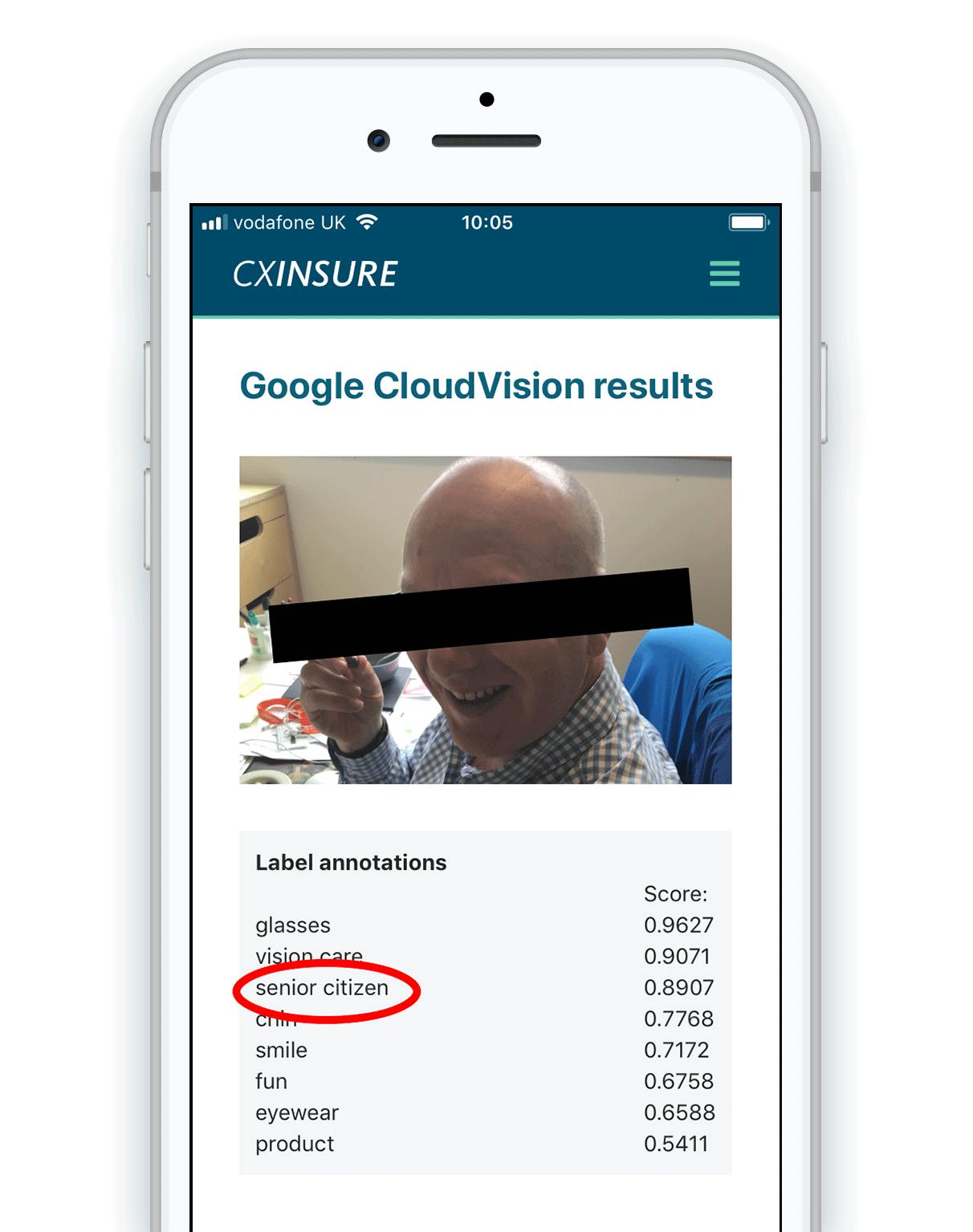

Maturing AI will get things wrong. But while it’s OK when it thinks psychedelic toasters are bananas and rifles are helicopters, humans are more easily offended. A colleague in our finance team caught in the crossfire of our experiment was awkwardly mis-identified.

Thankfully they were good-humoured and I hope to still get paid this month. But it’s a reminder that misuse is a thing to take seriously when implementing image recognition for real.

Conclusion

While Google Cloud Vision is impressive in its ability to detect scene objects and recognise facial expressions, product identification proved lacking.

It’s worth reiterating that we were using real-time image data which had not seen the light of the internet, and thus wasn’t cached by Google or associated with any metadata.

Maybe we need to think more laterally about using the available data to streamline the user journey. But without reliable brand or model recognition our original vision was somewhat hampered.

Early last year, ThoughtWorks’ Technology Radar advised a cautious but inquisitive approach to the adoption of "Cloud-based image comprehension" and has yet to change its stance, defining it as:

Worth exploring with the goal of understanding how it will affect your enterprise.

And that largely tallies with our experience. But regardless, the platform is super impressive and we’ll be watching its development closely.

This is just a start for us (next we’ll be testing Clarifai and its logo detection) and we wanted to share our findings, but we’d love to hear your thoughts and experiences.